Years ago I started writing Graphene as a small library of 3D transformation-related math types to be used by GTK (and possibly Clutter, even if that didn’t pan out until Georges started working on the Clutter fork inside Mutter).

Graphene’s only requirements are a C99 compiler and a decent toolchain capable of either taking SSE builtins or supporting vectorization on appropriately aligned types. This means that, unless you decide to enable the GObject types for each Graphene type, Graphene doesn’t really need GLib types or API, except that’s a bit of a lie.

As I wanted to test what I was doing, Graphene has an optional build time dependency on GLib for its test suite; the library itself may not use anything from GLib, but if you want to build and run the test suite then you need to have GLib installed.

This build time dependency makes testing Graphene on Windows a lot more complicated than it ought to be. For instance, I need to install a ton of packages when using the MSYS2 toolchain on the CI instance on AppVeyor, which takes roughly 6 minutes each for the 32-bit and the 64-bit builds; and I can’t build the test suite at all when using MSVC, because then I’d have to download and build GLib as well, and just to access the GTest API, which I don’t even like.

What’s wrong with GTest

GTest is kind of problematic (outside of Google hijacking the name of the API for their own testing framework, which makes looking for it a pain). GTest is a lot more complicated than a small unit testing API needs to be, for starters; it was originally written to be used with a specific harness, gtester, in order to generate a very brief HTML report using gtester-report, including some timing information on each unit, except that gtester is now deprecated because the build system gunk to make it work was terrible to deal with. So, we pretty much told everyone to stop bothering, add a --tap argument when calling every test binary, and use the TAP harness in Autotools.

Of course, this means that the testing framework now has a completely useless output format, and with it, a bunch of default behaviours driven by said useless output format, and we’re still deciding if we should break backward compatibility to ensure that the supported output format has a sane default behaviour.

On top of that, GTest piggybacks on GLib’s own assertion mechanism, which has two major downsides:

- it can be disabled at compile time by defining G_DISABLE_ASSERT before including glib.h, which, surprise, people tend to use when releasing; thus, you can’t run tests on builds that would most benefit from a test suite
- it literally abort()s the test unit, which breaks any test harness in existence that does not expect things to SIGABRT midway through a test suite, which includes GLib’s own deprecated gtester harness

To solve the first problem we added a lot of wrappers around g_assert(), like g_assert_true() and g_assert_no_error(), that won’t be disabled depending on your build options and thus won’t break your test suite; if your test suite is still using g_assert(), you’re strongly encouraged to port to the newer API. The second issue is still standing, and makes running a GTest-based test suite under any harness a pain, but especially under a TAP harness, which requires listing the number of tests you’ve run, or that you’re planning to run.
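To make the second problem concrete, here is a minimal sketch of the alternative: a check that records a failure and keeps going, so the TAP plan line can still be printed at the end. None of this is GTest or µTest API; the tap_state_t, tap_check() and tap_finish() names are made up for the illustration.

```c
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical bookkeeping for a tiny TAP producer */
typedef struct {
  int n_run;
  int n_failed;
} tap_state_t;

/* Report a result and record failures instead of calling abort() */
static bool
tap_check (tap_state_t *state, bool condition, const char *description)
{
  state->n_run += 1;
  if (!condition)
    state->n_failed += 1;

  printf ("%s %d - %s\n", condition ? "ok" : "not ok", state->n_run, description);

  return condition;
}

/* Emit the plan line; this is only possible because nothing aborted */
static int
tap_finish (const tap_state_t *state)
{
  printf ("1..%d\n", state->n_run);

  return state->n_failed;
}

/* Example run: the second check fails, the third one still executes */
static int
run_tap_example (void)
{
  tap_state_t state = { 0, 0 };

  tap_check (&state, 1 + 1 == 2, "addition works");
  tap_check (&state, 2 * 2 == 5, "deliberately failing check");
  tap_check (&state, true, "still runs after a failure");

  return tap_finish (&state);
}
```

Calling run_tap_example() from main() prints three result lines followed by the plan; had the failing check called abort(), the harness would never have seen the plan line.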

The remaining issues of GTest are the convoluted way to add tests using a unique path; the bizarre pattern matching API for warnings and errors; the whole sub-process API that relaunches the test binary and calls a single test unit in order to allow it to assert safely and capture its output. It’s very much the GLib test suite, except when it tries to use non-GLib API internally, like the command line option parser, or its own logging primitives; it’s also sorely lacking in the GObject/GIO side of things, so you can’t use standard API to create a mock GObject type, or a mock GFile.

If you want to contribute to GLib, then working on improving the GTest API would be a good investment of your time; since my project does not depend on GLib, though, I had the chance of starting with a clean slate.

A clean slate

For the last couple of years I’ve been playing off and on with a small test framework API, mostly inspired by BDD frameworks like Mocha and Jasmine. Behaviour Driven Development is kind of a buzzword, like test driven development, but I particularly like the idea of describing a test suite in terms of specifications and expectations: you specify what a piece of code does, and you match results to your expectations.

The API for describing the test suites is modelled on natural language (assuming your language is English, sadly):

    describe("your data type", function() {
      it("does something", () => {
        expect(doSomething()).toBe(true);
      });

      it("can greet you", () => {
        let greeting = getHelloWorld();
        expect(greeting).not.toBe("Goodbye World");
      });
    });

Of course, C is more verbose than JavaScript, but we can adopt a similar mechanism:

    /* Spec: wraps the result of do_something() and checks it */
    static void
    something (void)
    {
      expect ("doSomething",
              bool_value (do_something ()),
              to_be, true,
              NULL);
    }

    /* Spec: checks the string returned by get_hello_world() */
    static void
    greet (void)
    {
      const char *greeting = get_hello_world ();

      expect ("getHelloWorld",
              string_value (greeting),
              not, to_be, "Goodbye World",
              NULL);
    }

    /* Suite: lists the specs by passing the functions above */
    static void
    type_suite (void)
    {
      it ("does something", something);
      it ("can greet you", greet);
    }

    …

      describe ("your data type", type_suite);

    …

If only C11 got blocks from Clang, this would look a lot less clunky.
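Without blocks, the suite and spec bodies have to be plain function pointers. The following is a minimal sketch of how a describe()/it() pair could be wired up that way; it is not µTest’s implementation, and the demo_ names and counters are invented for the example.

```c
#include <stdio.h>

/* Suites and specs are ordinary functions taking no arguments */
typedef void (* demo_func_t) (void);

static int demo_n_suites = 0;
static int demo_n_specs = 0;

/* Print the suite description, then run its body */
static void
demo_describe (const char *description, demo_func_t body)
{
  printf ("%s\n", description);
  demo_n_suites += 1;
  body ();
}

/* Print the spec description, indented, then run its body */
static void
demo_it (const char *description, demo_func_t body)
{
  printf ("  %s\n", description);
  demo_n_specs += 1;
  body ();
}

/* Empty spec bodies, standing in for real expectations */
static void demo_does_something (void) { }
static void demo_can_greet (void) { }

static void
demo_type_suite (void)
{
  demo_it ("does something", demo_does_something);
  demo_it ("can greet you", demo_can_greet);
}

/* Runs one suite and returns the number of specs it executed */
static int
run_describe_example (void)
{
  demo_describe ("your data type", demo_type_suite);

  return demo_n_specs;
}
```

Every spec needs its own named function, which is exactly the clunkiness that closures would remove.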

The value wrappers are also necessary, because C is only type safe as long as every type you have is an integer.
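One way to picture the wrappers is as a tagged union, so that the matcher knows at run time which kind of value it was handed. This is only a sketch under that assumption: the demo_value_t type and the demo_ functions below are hypothetical, and the real expect() in the example above is variadic rather than taking a fixed list of arguments.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

typedef enum { DEMO_BOOL, DEMO_STRING } demo_kind_t;

/* A value plus a tag describing what it holds */
typedef struct {
  demo_kind_t kind;
  union {
    bool b;
    const char *s;
  } as;
} demo_value_t;

static demo_value_t
demo_bool_value (bool b)
{
  demo_value_t v;
  v.kind = DEMO_BOOL;
  v.as.b = b;
  return v;
}

static demo_value_t
demo_string_value (const char *s)
{
  demo_value_t v;
  v.kind = DEMO_STRING;
  v.as.s = s;
  return v;
}

/* Values of different kinds never compare equal */
static bool
demo_value_equal (demo_value_t a, demo_value_t b)
{
  if (a.kind != b.kind)
    return false;

  switch (a.kind)
    {
    case DEMO_BOOL:
      return a.as.b == b.as.b;
    case DEMO_STRING:
      if (a.as.s == NULL || b.as.s == NULL)
        return a.as.s == b.as.s;
      return strcmp (a.as.s, b.as.s) == 0;
    }

  return false;
}

/* "to be" matcher, optionally negated; reports and returns the outcome */
static bool
demo_expect (const char *description, demo_value_t actual,
             demo_value_t expected, bool negate)
{
  bool ok = demo_value_equal (actual, expected);

  if (negate)
    ok = !ok;

  printf ("%s %s\n", ok ? "pass:" : "fail:", description);

  return ok;
}

/* Mirrors the two expectations above; returns the number of failures */
static int
run_value_example (void)
{
  int failures = 0;

  if (!demo_expect ("doSomething",
                    demo_bool_value (true), demo_bool_value (true), false))
    failures += 1;

  if (!demo_expect ("getHelloWorld",
                    demo_string_value ("Hello World"),
                    demo_string_value ("Goodbye World"), true))
    failures += 1;

  return failures;
}
```

The tag is what lets the comparison refuse to treat a boolean and a string as the same thing, which the bare C types would not do for you.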

Since we’re good C citizens, we should namespace the API, which requires naming this library—let’s call it µTest, in a fit of unoriginality.

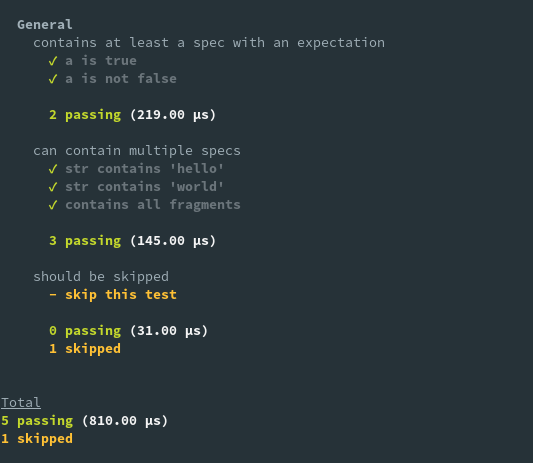

One of the nice bits of Mocha and Jasmine is the output of running a test suite:

    $ ./tests/general

      General
        contains at least a spec with an expectation
          ✓ a is true
          ✓ a is not false

          2 passing (219.00 µs)

        can contain multiple specs
          ✓ str contains 'hello'
          ✓ str contains 'world'
          ✓ contains all fragments

          3 passing (145.00 µs)

        should be skipped
          - skip this test

          0 passing (31.00 µs)
          1 skipped

      Total
      5 passing (810.00 µs)
      1 skipped

Or, with colors:

Using colors means immediately taking this more seriously

The colors go away automatically if you redirect the output to something that is not a TTY, so your logs won’t be messed up by escape sequences.
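The usual way to get this behaviour on POSIX systems is to ask isatty() about the output stream before emitting any escape sequence. The sketch below assumes a POSIX platform and uses made-up helper names; it shows the general technique, not necessarily how µTest does it.

```c
#define _POSIX_C_SOURCE 200809L

#include <stdbool.h>
#include <stdio.h>
#include <unistd.h>   /* isatty(), POSIX only */

/* True only when the stream is attached to a terminal */
static bool
demo_stream_wants_color (FILE *stream)
{
  return isatty (fileno (stream)) != 0;
}

/* Format a passing result, wrapping the tick in green when asked to */
static int
demo_format_pass (char *buf, size_t len, const char *description, bool use_color)
{
  if (use_color)
    return snprintf (buf, len, "\033[32m✓\033[0m %s", description);

  return snprintf (buf, len, "✓ %s", description);
}

/* Print one passing result to stdout, colored only on a TTY */
static void
demo_print_pass (const char *description)
{
  char buf[256];

  demo_format_pass (buf, sizeof (buf), description,
                    demo_stream_wants_color (stdout));
  puts (buf);
}
```

Keeping the formatting separate from the TTY check makes the plain and colored variants easy to test without a terminal.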

If you have a test harness, then you can use the MUTEST_OUTPUT environment variable to control the output; for instance, if you’re using TAP you’ll get:

    $ MUTEST_OUTPUT=tap ./tests/general
    # General
    # contains at least a spec with an expectation
    ok 1 a is true
    ok 2 a is not false
    # can contain multiple specs
    ok 3 str contains 'hello'
    ok 4 str contains 'world'
    ok 5 contains all fragments
    # should be skipped
    ok 6 # skip: skip this test
    1..6

Which can be passed through to prove to get:

    $ MUTEST_OUTPUT=tap prove ./tests/general
    ./tests/general .. ok
    All tests successful.
    Files=1, Tests=6,  0 wallclock secs ( 0.02 usr +  0.00 sys =  0.02 CPU)
    Result: PASS

I’m planning to add some additional output formatters, like JSON and XML.
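Selecting a formatter from MUTEST_OUTPUT can be as simple as mapping the variable to an enumeration and dispatching on it. The sketch below is an assumption about how that could look: only the "tap" value comes from the example above, while the enumeration, the fallback to the default output for unknown values, and the function names are invented.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

typedef enum {
  DEMO_OUTPUT_DEFAULT,  /* the human-readable output */
  DEMO_OUTPUT_TAP
} demo_output_t;

/* Map the variable's value to a format; unset or unknown means default */
static demo_output_t
demo_parse_output (const char *value)
{
  if (value != NULL && strcmp (value, "tap") == 0)
    return DEMO_OUTPUT_TAP;

  return DEMO_OUTPUT_DEFAULT;
}

static demo_output_t
demo_output_from_env (void)
{
  return demo_parse_output (getenv ("MUTEST_OUTPUT"));
}

/* Format one passing result according to the selected output */
static int
demo_format_result (char *buf, size_t len, demo_output_t output,
                    int number, const char *description)
{
  if (output == DEMO_OUTPUT_TAP)
    return snprintf (buf, len, "ok %d %s", number, description);

  return snprintf (buf, len, "✓ %s", description);
}

/* Print one passing result using whatever the environment asks for */
static void
demo_report_pass (int number, const char *description)
{
  char buf[256];

  demo_format_result (buf, sizeof (buf), demo_output_from_env (),
                      number, description);
  puts (buf);
}
```

With this shape, a new formatter would mean one more enumeration value and one more branch.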

Using µTest

Ideally, µTest should be used as a sub-module or a Meson sub-project of your own; if you’re using it as a sub-project, you can tell Meson to build a static library that won’t get installed on your system, e.g.:

    mutest_dep = dependency('mutest-1',
      fallback: [ 'mutest', 'mutest_dep' ],
      default_options: ['static=true'],
      required: false,
      disabler: true,
    )

    # Or, if you're using Meson < 0.49.0
    mutest_dep = dependency('mutest-1', required: false)
    if not mutest_dep.found()
      mutest = subproject('mutest',
        default_options: [ 'static=true', ],
        required: false,
      )

      if mutest.found()
        mutest_dep = mutest.get_variable('mutest_dep')
      else
        mutest_dep = disabler()
      endif
    endif

Then you can make the tests conditional on mutest_dep.found().

µTest is kind of experimental, and I’m still breaking its API in places, as a result of documenting it and trying it out by porting the Graphene test suite to it. There’s still a bunch of API that I’d like to land, like custom matchers/formatters for complex data types, and a decent way to skip a specification or a whole suite; plus, as I said above, some additional formatted output.

If you have feedback, feel free to open an issue—or a pull request wink wink nudge nudge.